By Andrew Beer

The revelation that a key paper by Rogoff and Reinhart included errors in both coding and data highlights the need for investors and practitioners to periodically re-evaluate the assumptions and conclusions in frequently cited studies. In the factor-based hedge fund replication space, recently published white papers have cited two studies to support or question the underlying concept. First released in mid-2006, Jasmina Hasanhodzic and Andrew Lo’s seminal paper, “Can Hedge Fund Returns be Replicated?: The Linear Case” (hereafter, “Lo”), essentially laid the groundwork for the industry by concluding that a linear, factor-based model could successfully replicate much of the returns of various hedge fund strategies. On the other side of the debate, EDHEC’s Noel Amenc and colleagues published three papers over 2008 and 2009 which argued that factor-based replication was “systematically inferior” to investing directly in hedge funds.

With the added benefit of several years of live history, it is now clear that Lo actually understated the effectiveness of the strategy by failing to account for how survivorship bias in the hedge fund data would affect relative pro forma returns. Likewise, the more recent (2009) paper by Amenc et al., “Performance of Passive Hedge Fund Replication Strategies” (hereafter, “Amenc”), failed to include actual results from replication indices launched in 2007-08 that demonstrated conclusively that replication models had matched or outperformed actual hedge fund portfolios through the crisis. Furthermore, its conclusions were undermined by inconsistent factor specifications. The following note expands on these two points.

Hasanhodzic and Lo, “Can Hedge Fund Returns be Replicated?: The Linear Case” (2007)

This important paper, first released in 2006, introduced the concept of using a 24-month rolling-window linear regression to replicate hedge fund returns. In many ways, it launched the factor-based hedge fund replication business. Interestingly, though, the authors appear to have overlooked the most important conclusion:

- Using a simple five-factor model, the replication of an equally weighted portfolio of 1,610 funds appears to deliver all or virtually all of the returns over almost 20 years, once those returns are adjusted for survivorship bias.

In other words, the simple clone’s performance exceeded all expectations during the “high alpha” period of 1986-2005. Remarkably, this pro forma performance of the clone was approximately equal to the performance of the S&P 500 over the same period, but with materially lower volatility and drawdowns. This is a startling result that is lost in the paper’s forty pages of formulas, text and tables. Here’s why:

The data set used was based entirely on “live” funds in the TASS database as of September 2005 (1,610 funds). Invariably, “live” funds have outperformed “dead” peers by a wide margin: in the HFR database, for instance, by more than 400 bps per annum. Inexplicably, the authors assert that “any survivorship bias should impact both funds and clones identically,” and therefore can be ignored. This is simply incorrect. We know today that this kind of data bias, by definition, is “non-replicable.” Therefore, the clone should be compared to a realistic measure of performance, i.e. one adjusted for survivorship bias. This is why replicators are often benchmarked against indices like the HFRI Fund of Funds index that are more representative of actual investor returns.

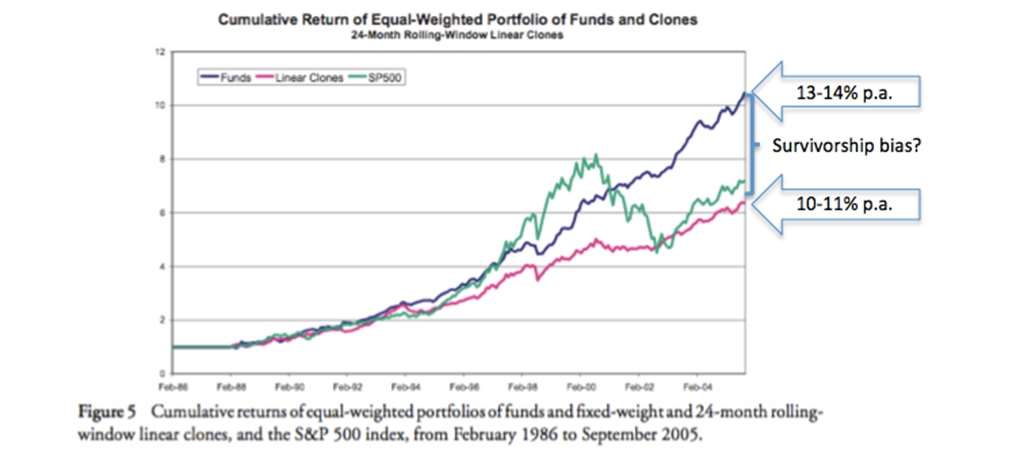

From Figure 5 in the paper, we can infer that the equally weighted portfolio of sample funds returned between 13% and 14% on a compound annual basis over almost twenty years. This is clearly unrealistically high: hedge funds as a group simply did not outperform the S&P 500 by 200-300 bps per annum on a net basis during a twenty-year bull market in which stocks returned 10% per annum. Assuming several hundred bps of survivorship bias, the hedge fund portfolio would have slightly underperformed the S&P 500, but with materially lower drawdowns and volatility. And, in fact, this is precisely how the simple clone performed. See Figure 5 reproduced below with commentary added.

In this context, the performance of the linear clone (around 10% per annum) is remarkable and should have been highlighted more prominently.
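The arithmetic behind this comparison can be made explicit with a rough sketch. It takes the 13% to 14% range inferred from Figure 5 and the 10% stock return quoted above, and subtracts an assumed survivorship bias of 400 bps. That bias figure is an assumption borrowed from the HFR number cited earlier, not a measured value for the TASS sample. The 20-year horizon rounds "almost twenty years," and subtracting the bias directly from a compound return is only an approximation. The function names are illustrative.

```python
def adjust_for_survivorship(reported_return, assumed_bias):
    """Approximate bias-adjusted annual return (simple subtraction)."""
    return reported_return - assumed_bias


def growth_of_one(annual_return, years):
    """Value of 1 unit compounded at annual_return for the given years."""
    return (1.0 + annual_return) ** years


REPORTED_RANGE = (0.13, 0.14)   # inferred from Figure 5 of the paper
ASSUMED_BIAS = 0.04             # assumption: roughly the HFR live/dead gap
STOCK_RETURN = 0.10             # stock return quoted in the text
YEARS = 20                      # assumption: "almost twenty years" rounded

for reported in REPORTED_RANGE:
    adjusted = adjust_for_survivorship(reported, ASSUMED_BIAS)
    print(
        f"reported {reported:.1%} -> adjusted {adjusted:.1%}, "
        f"growth of 1: {growth_of_one(adjusted, YEARS):.2f} "
        f"vs stocks {growth_of_one(STOCK_RETURN, YEARS):.2f}"
    )
```

Under these assumptions the adjusted fund portfolio lands at or slightly below the stock return, which is the range in which the clone's roughly 10% per annum sits.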

A secondary issue is the use of a factor set that is missing important market exposures. The study employs only five market factors: the S&P 500 total return, the Lehman AA index, the spread between the Lehman BAA index and Lehman Treasury index, the GSCI total return, and the USD index total return. More recent studies, including our own, have demonstrated that emerging markets, short-term Treasury notes and small capitalization equities are important factors since they enable the models to incorporate, respectively, volatility expectations, yield curve trades and market capitalization bias. Conversely, while the inclusion of the GSCI has intrinsic appeal, it does not appear to be additive to out-of-sample results over time. Consequently, the overall results arguably would have been even more compelling with a slightly more robust factor set.
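The 24-month rolling-window regression discussed in this section can be sketched in a few lines of Python. This is a simplified illustration rather than the paper's exact specification: it fits ordinary least squares without an intercept and leaves out any constraints or volatility rescaling. The function names and the synthetic data are hypothetical, and the five columns merely stand in for the five factors listed above.

```python
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ols_betas(factor_rows, fund_returns):
    """Least-squares betas (no intercept) from the normal equations."""
    k = len(factor_rows[0])
    xtx = [[sum(r[i] * r[j] for r in factor_rows) for j in range(k)]
           for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(factor_rows, fund_returns))
           for i in range(k)]
    return solve_linear_system(xtx, xty)


def rolling_clone_returns(factor_rows, fund_returns, window=24):
    """Out-of-sample clone returns.

    For each month t, betas are fitted on the previous `window` months
    only and then applied to month t's factor returns.
    """
    clone = []
    for t in range(window, len(fund_returns)):
        betas = ols_betas(factor_rows[t - window:t],
                          fund_returns[t - window:t])
        clone.append(sum(b * f for b, f in zip(betas, factor_rows[t])))
    return clone


# Synthetic, noise-free demo: 60 months of made-up returns for 5 factors.
random.seed(0)
factors = [[random.gauss(0.0, 0.04) for _ in range(5)] for _ in range(60)]
true_betas = [0.4, 0.2, 0.1, 0.05, -0.1]  # hypothetical exposures
fund = [sum(b * f for b, f in zip(true_betas, row)) for row in factors]

clone = rolling_clone_returns(factors, fund, window=24)
tracking_gap = max(abs(c - y) for c, y in zip(clone, fund[24:]))
print(f"{len(clone)} clone months, max gap vs fund: {tracking_gap:.2e}")
```

Because the synthetic fund is an exact linear mix of the factors, the clone tracks it almost perfectly. Real fund returns contain noise and exposures outside the factor set, which is where the choice of factors starts to matter.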

Amenc et al., “The Performance of Passive Hedge Fund Replication Strategies” (2009)

In response to the paper by Hasanhodzic and Lo and the launch of several factor-based indices, EDHEC released several papers during 2007-09 that were highly critical of the concept. In the first paper, “The Myths and Limits of Passive Hedge Fund Replication: An Attractive Concept… Still a Work-in-Progress,” the authors seek to redo the rolling linear model employed by Hasanhodzic and Lo, but apply it to the EDHEC hedge fund database. Since there is very little explanation of the underlying data, it is impossible to estimate the effect of survivorship bias or other sampling issues.

The more relevant paper, “The Performance of Passive Hedge Fund Replication Strategies,” was published in 2009. It is difficult to read this paper without the sense that the authors, who are closely tied to the fund of hedge funds industry (and funded by Newedge), had a predetermined agenda. The end result is a paper that includes some very helpful analysis (for instance, that Kalman filters and non-linear factors don’t improve out-of-sample results) but whose conclusions are undermined by selective omission. For instance:

- Even though there were more than two years of live data from replication indices that showed strong results with high correlation through the crisis, the authors neglect to include them and focus instead on redoing the Lo analysis with the admittedly incomplete five-factor set.

- When the authors do in fact acknowledge that Lo’s factor base should be expanded to include emerging markets, small cap stocks and other factors, they test each strategy with an unreasonably narrow subset of factors even though it was well established by this time that a more robust factor set was critical. This is discussed in detail below.

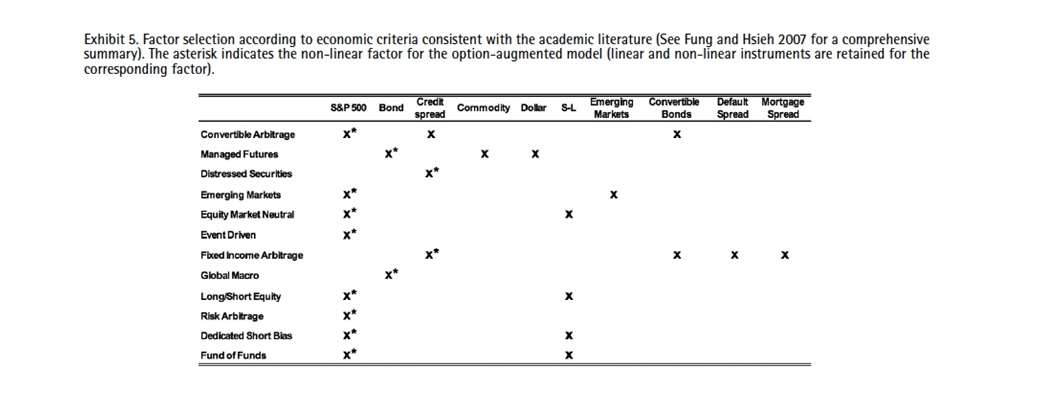

In Section 3.2, the authors “test whether selecting specific sets of factors for each strategy leads to an improvement in the replication performance. Based on an economic analysis and in accordance with Fung and Hsieh (2007), who provide a comprehensive summary of factor based risk analyses over the past decade, we select potentially significant risk factors for each strategy.” The factors identified are quite reasonable, such as the spread between small and large capitalization stocks, emerging markets, and other fixed income spreads.

In the table below, the five factors on the left side represent the original Lo portfolio, while the five on the right represent the Fung & Hsieh additions.

The logical next step would be to test whether the results of the Lo five-factor set are improved by the addition of one or more of the factors. Instead, the authors use only 1-4 factors for each strategy and throw out most of the original factors. Remember that at this time it was well established that a narrow factor base was insufficient to replicate most hedge fund returns. This is why Merrill, Goldman Sachs and others all used 6-8 factors, not 1-4. To use one example, in order to replicate the macro space, the authors used only the Lehman AA Intermediate Bond index (a single factor) with a 24-month rolling window. For distressed, the one factor is the spread between a BAA index and Treasurys. For risk arbitrage, it’s only the S&P 500. For long/short equity and funds of funds, it’s the S&P 500 and the small cap-large cap spread.

To underscore the point, the debate at the time was not whether one or two factors could reasonably replicate sector returns, but whether a diversified portfolio of market factors could do so. By starkly reducing the factor set, the authors essentially designed an experiment that was bound to fail. Consequently, investors should seriously question the validity of the authors’ conclusion that “the performance of the replicating strategies is systematically inferior to that of the actual hedge funds.”

Conclusion

Each paper covers a lot of ground and approaches the topic from a slightly different angle. However, the important point is that understanding the underlying assumptions is critical in order to accurately interpret the results. These assumptions (the survivorship bias issue in the Lo data and the reduction of factors in Amenc et al.) are lost in the paragraph-long abstract and buried in dozens of pages of analysis, formulas and tables. Without fully appreciating the limitations imposed by various assumptions, as investors we risk misinterpreting the validity and implications of the conclusions.